Content remains the foundation of SEO—now and always.

Your potential customers discover your brand through search engines, where rankings depend on many factors, including the quality and originality of your published content.

But not all content holds the same weight.

Duplicate content has long been a headache for brands and marketers, sparking ongoing debates in the SEO world.

So, let’s break it down. This guide will dive into how duplicate content affects SEO and the best practices to keep your rankings intact.

Duplicate content refers to identical or nearly identical content appearing on multiple web pages—either within the same website or across different domains. This includes both exact copies and rewritten versions that closely resemble the original.

A common example? Republishing your blog post on Medium without a proper canonical tag linking back to the original source.

Another case? If the same webpage is accessible through multiple URLs on your site (for example, with and without www, over both http and https, or with tracking parameters appended), search engines may treat them as duplicates.
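To see how quickly URL variants add up, here is a minimal Python sketch that collapses common variants (http vs https, www vs non-www, a trailing slash, tracking parameters) into one normalized form and groups the URLs that land on the same page. The `IGNORED_PARAMS` set, the preference for https and non-www hosts, and the example.com URLs are assumptions for illustration; which variants really serve identical content depends on your site.

```python
from collections import defaultdict
from urllib.parse import parse_qsl, urlencode, urlsplit, urlunsplit

# Hypothetical query parameters assumed never to change page content
IGNORED_PARAMS = {"utm_source", "utm_medium", "utm_campaign", "sessionid"}


def normalize_url(url: str) -> str:
    """Collapse common URL variants into one normalized form (assumes https, non-www)."""
    parts = urlsplit(url)
    host = parts.netloc.lower()
    if host.startswith("www."):
        host = host[4:]
    # Treat "/shoes/" and "/shoes" as the same path; paths stay case-sensitive
    path = parts.path.rstrip("/") or "/"
    # Drop ignored parameters and sort the rest so their order doesn't matter
    query = sorted(
        (k, v)
        for k, v in parse_qsl(parts.query, keep_blank_values=True)
        if k not in IGNORED_PARAMS
    )
    return urlunsplit(("https", host, path, urlencode(query), ""))


def group_variants(urls):
    """Return only the normalized URLs reached by more than one variant."""
    groups = defaultdict(list)
    for url in urls:
        groups[normalize_url(url)].append(url)
    return {key: members for key, members in groups.items() if len(members) > 1}
```

For example, `http://www.example.com/shoes/`, `https://example.com/shoes` and `https://example.com/shoes?utm_source=newsletter` all normalize to `https://example.com/shoes`, so `group_variants` reports them as one group of three duplicate candidates.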

That said, structural elements like headers, footers, and navigation menus don’t count as duplicate content, as they are essential parts of a website’s framework.

Duplicate content typically falls into two categories:

Exact copies – A single blog post published twice by mistake, each with a different URL.

Near-duplicate content – Not an exact replica, but close enough that search engines may still flag it.
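Search engines don't publish exactly how they detect duplicates, but a classic way to illustrate the two categories is to compare a hash of the normalized text (exact copies) and the overlap of overlapping word sequences, or "shingles" (near-duplicates). This Python sketch uses Jaccard similarity over 3-word shingles; the 0.5 threshold is an arbitrary assumption, not a value any search engine is known to use.

```python
import hashlib
import re


def fingerprint(text: str) -> str:
    """Exact-copy check: hash the text after normalizing case and whitespace."""
    normalized = " ".join(text.lower().split())
    return hashlib.sha256(normalized.encode("utf-8")).hexdigest()


def shingles(text: str, k: int = 3) -> set:
    """Set of overlapping k-word sequences in the text."""
    words = re.findall(r"\w+", text.lower())
    return {tuple(words[i:i + k]) for i in range(len(words) - k + 1)}


def jaccard(a: str, b: str, k: int = 3) -> float:
    """Shared shingles divided by all distinct shingles (1.0 = identical sets)."""
    sa, sb = shingles(a, k), shingles(b, k)
    if not sa and not sb:
        return 1.0
    return len(sa & sb) / len(sa | sb)


def classify(a: str, b: str, threshold: float = 0.5) -> str:
    """Label a pair of texts; the threshold is a hypothetical cut-off."""
    if fingerprint(a) == fingerprint(b):
        return "exact"
    if jaccard(a, b) >= threshold:
        return "near-duplicate"
    return "distinct"
```

Two ten-word sentences that differ only in their last word share 7 of 9 distinct shingles (a similarity of about 0.78), so they are labeled near-duplicates, while the same sentence with different spacing or capitalization is labeled an exact copy.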

Duplicate content poses several SEO challenges, making it a pressing concern for businesses, marketers, and SEO professionals.

Before diving into its impact on rankings, it also helps to split duplicate content by where it appears:

Same-domain duplication – Occurs when similar or identical content exists on multiple pages within the same website.

Cross-domain duplication – Happens when the same content appears on different websites, for example when an article is republished on another site with permission (content syndication) or copied without it (content scraping).

While cross-domain duplication can be managed with canonical tags, having duplicate pages within your own site is a bigger issue.

So, why exactly does duplicate content hurt SEO? Let’s see.

1. Search Engine Confusion

Google prioritizes indexing and ranking unique, high-value content. When duplicate content exists on your site, search engines may struggle to determine which version to rank, and they often choose one that isn't your preferred page.

This can lead to:

A drop in visibility for the page you actually want to rank.

Traffic being directed to a less-optimized version, affecting user engagement and conversions.

In short, duplicate content not only confuses search engines but also disrupts the user experience by sending visitors to pages that may not provide the best information or navigation flow.

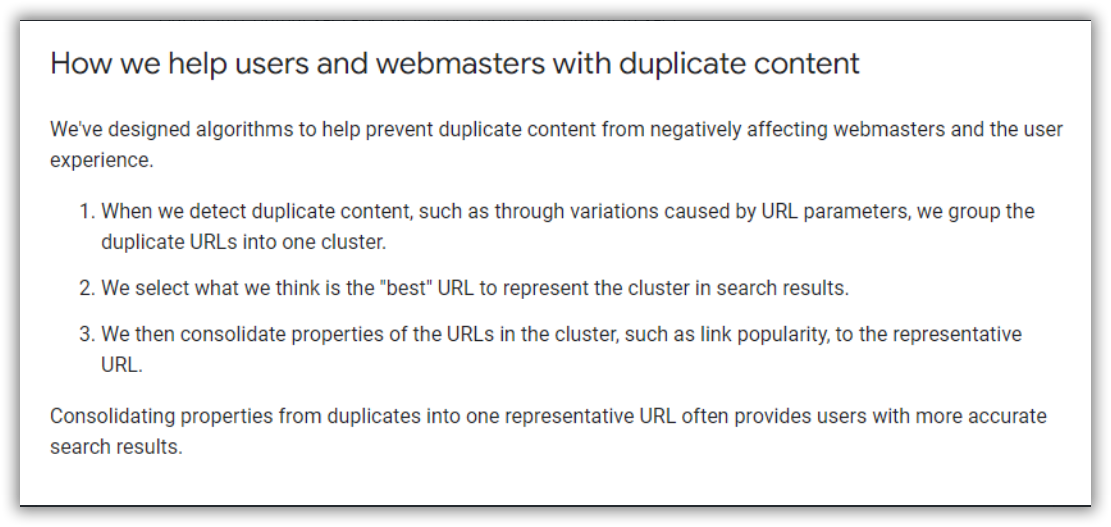

Here’s how Google puts it:

2. Competing Against Yourself

One of the biggest SEO pitfalls of duplicate content is self-competition—where your own pages end up battling for rankings, ultimately diluting your organic traffic.

This issue becomes even more problematic when duplicate content exists across multiple domains. Google may rank a syndicated version of your content higher than the original source, leading to unintended traffic shifts.

For example, an article originally published on its own site might later be republished on Medium. Even with minor rewording, if the structure and message remain largely the same, both versions can end up ranking in search results.

The result?

Traffic gets split between the two articles.

In some cases, the duplicate version outranks the original, diverting valuable visitors away from the intended source.

This kind of self-competition not only weakens your primary page’s ranking but also leads to another major problem…

3. Indexing Challenges

Every website has a limited crawl budget: Googlebot only spends so much time and so many resources crawling your pages before moving on. If your site has many duplicate pages, Googlebot wastes time crawling redundant URLs instead of discovering fresh, valuable pages.

This is particularly problematic for larger sites with thousands of URLs, where excessive duplication can slow down indexing for new content. If your crawl budget is exhausted, important pages might take longer to appear in search results—or worse, not get indexed at all.
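A rough way to gauge how much crawling goes to duplicates is to count how many crawled URLs collapse onto the same page. This Python sketch assumes you have a list of URLs Googlebot requested (for example, pulled from your server logs) and, as a deliberate simplification, treats URLs that differ only by query string as the same page; parameters that genuinely change the content would break that assumption.

```python
from urllib.parse import urlsplit, urlunsplit


def strip_query(url: str) -> str:
    """Simplifying assumption: URLs differing only by query string are one page."""
    parts = urlsplit(url)
    return urlunsplit((parts.scheme, parts.netloc.lower(), parts.path, "", ""))


def duplicate_crawl_share(crawled_urls) -> float:
    """Fraction of crawl requests spent on repeat variants of an already-seen page."""
    total = len(crawled_urls)
    if total == 0:
        return 0.0
    unique_pages = {strip_query(url) for url in crawled_urls}
    # Every request beyond the first one per page counts as potentially wasted
    return (total - len(unique_pages)) / total
```

With five crawled URLs that resolve to only two pages (say `/a` plus two filtered variants of it, and `/b` plus one tagged variant), the function reports that 3 of 5 requests, or 60%, went to duplicates.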

For sites that rely on timely content—like news platforms or seasonal businesses—this inefficiency can be a major setback. If an article isn't indexed quickly, it loses its relevance, meaning lost opportunities for traffic and engagement.

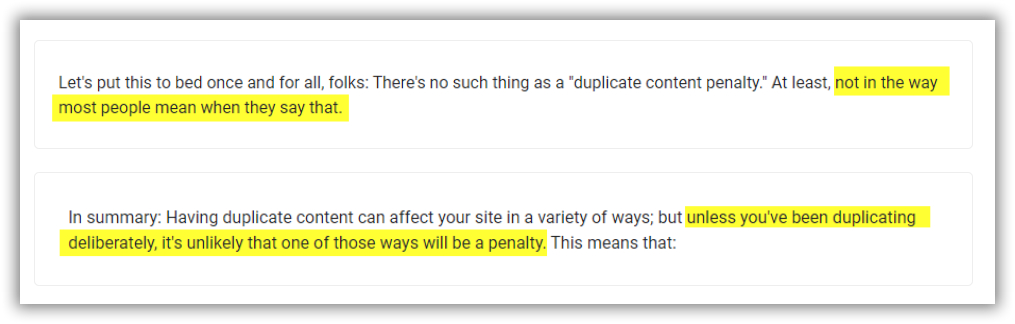

4. Risk of Penalties

Google rarely issues direct penalties for duplicate content, but that doesn’t mean they’re off the table. If your site is found to be deliberately duplicating content to manipulate rankings, Google can take action, including de-indexing your pages or, in extreme cases, removing your site from search results entirely.

That’s a worst-case scenario, but it’s a risk no business can afford to take.

Beyond SEO concerns, duplicate content also impacts brand credibility and user experience. It’s not just about avoiding duplicate pages on your own site—you also need to protect your content from being scraped and republished elsewhere without permission.

To keep your content strategy clean and search-friendly, here are the best practices for managing, preventing, and resolving duplicate content across your own site and external domains.

1. Use Canonical Tags

A canonical tag is a simple yet powerful HTML element that tells search engines which version of a page you want treated as the original. Google treats it as a strong hint rather than a strict directive, but it helps prevent duplicate content issues by consolidating ranking signals and steering the right page into search results.

Even if you don’t think your site has duplicate content, it’s best practice to use canonical tags proactively. Many websites unintentionally create duplicate pages through URL variations, such as search filters on e-commerce stores or category pages in blogs.
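In HTML, the tag is written as `<link rel="canonical" href="...">` inside the page's `<head>`. The Python sketch below derives a canonical URL for a filtered e-commerce URL by dropping filter and sort parameters, then renders the tag. The parameter names in `FILTER_PARAMS` and the shop.example.com URLs are hypothetical; a page with no filter parameters simply gets a self-referencing canonical, which matches the proactive approach described above.

```python
from html import escape
from urllib.parse import parse_qsl, urlencode, urlsplit, urlunsplit

# Hypothetical filter/sort parameters that create variants of one category page
FILTER_PARAMS = {"color", "size", "sort", "price_min", "price_max"}


def canonical_url(url: str) -> str:
    """Drop filter/sort parameters; keep parameters that change the content."""
    parts = urlsplit(url)
    kept = [(k, v) for k, v in parse_qsl(parts.query) if k not in FILTER_PARAMS]
    return urlunsplit((parts.scheme, parts.netloc, parts.path, urlencode(kept), ""))


def canonical_link_tag(url: str) -> str:
    """Render the <link rel="canonical"> element for the page's <head>."""
    href = escape(canonical_url(url), quote=True)  # e.g. "&" becomes "&amp;"
    return f'<link rel="canonical" href="{href}">'
```

For instance, `canonical_link_tag("https://shop.example.com/shoes?color=red&sort=price")` returns `<link rel="canonical" href="https://shop.example.com/shoes">`, while a `page=2` parameter would be kept because it changes what the page shows.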

2. Set Up 301 Redirects

Redirecting duplicate pages to the original version is an effective way to eliminate duplicate content while preserving SEO value.

A 301 redirect signals to search engines that a page has permanently moved to a new location. This ensures that both users and crawlers are automatically directed to the correct version, consolidating link equity and preventing ranking dilution.
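Redirects are usually configured in your web server, CDN, or CMS, but the mechanics are easy to show with Python's built-in WSGI tooling. In this minimal sketch, requests for paths listed in a hypothetical `REDIRECTS` map receive a `301 Moved Permanently` status with a `Location` header pointing at the original page; every other path is served normally.

```python
# Hypothetical map of duplicate paths to their original versions
REDIRECTS = {
    "/blog/duplicate-content-guide-2": "/blog/duplicate-content-guide",
    "/old-services-page": "/services",
}


def app(environ, start_response):
    """WSGI app that permanently redirects known duplicate paths."""
    path = environ.get("PATH_INFO", "/")
    target = REDIRECTS.get(path)
    if target is not None:
        # 301 tells browsers and crawlers the page has moved for good
        start_response("301 Moved Permanently", [("Location", target)])
        return [b""]
    start_response("200 OK", [("Content-Type", "text/plain; charset=utf-8")])
    return [f"Page: {path}".encode("utf-8")]


if __name__ == "__main__":
    from wsgiref.simple_server import make_server

    with make_server("localhost", 8000, app) as server:
        server.serve_forever()
```

Running the script and requesting `http://localhost:8000/old-services-page` should send you on to `/services`. Keeping the mapping in one table makes it easy to review alongside your content audits.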

3. Merge and Consolidate Similar Content

Over time, as your blog grows, you might end up with multiple posts covering nearly identical topics. This can create unintentional duplication, competing pages, and diluted rankings. The best solution? Merge similar posts into a single, stronger one.

For large websites with thousands of articles, content consolidation is an effective way to streamline indexing, optimize crawl budget, and boost search visibility.
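Once you know which posts overlap, the merge can be planned as a redirect map from each retired post to the one that absorbs it. This Python sketch picks the post with the most traffic in each topic group as the keeper; the `topic` labels, the `monthly_visits` figures, and the traffic-based rule itself are assumptions (you might prefer backlinks or content quality as the deciding factor).

```python
from dataclasses import dataclass


@dataclass
class Post:
    url: str
    topic: str           # hypothetical label from your audit, e.g. a target keyword
    monthly_visits: int  # hypothetical metric from an analytics export


def consolidation_plan(posts):
    """Map each overlapping post to the strongest post on the same topic.

    The result can feed a 301 redirect table once the content is merged.
    """
    by_topic = {}
    for post in posts:
        by_topic.setdefault(post.topic, []).append(post)

    redirects = {}
    for group in by_topic.values():
        if len(group) < 2:
            continue  # only one post on this topic: nothing to merge
        keeper = max(group, key=lambda p: p.monthly_visits)
        for post in group:
            if post is not keeper:
                redirects[post.url] = keeper.url
    return redirects
```

With three "duplicate content" posts and one "canonical tags" post, only the two weaker duplicate-content posts appear in the plan, both pointing at the highest-traffic one.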

4. Conduct Regular Content Audits

Preventing duplicate content starts with consistent content audits. A thorough audit helps you identify overlapping topics, outdated posts, and opportunities for consolidation, so you don’t accidentally publish content that already exists on your site. Run audits regularly rather than once, and your site stays lean, optimized, and free from duplicate content issues.
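One quick audit check is to look for pages that share the same title tag, a common symptom of overlapping posts. This Python sketch assumes you already have a URL-to-title mapping (for example, from a site crawl export) and groups titles that match after ignoring case and extra whitespace. It won't catch reworded titles, so treat it as a first pass rather than a complete audit.

```python
from collections import defaultdict


def find_duplicate_titles(pages):
    """pages: mapping of URL -> <title> text. Returns titles used by 2+ URLs."""
    groups = defaultdict(list)
    for url, title in pages.items():
        key = " ".join(title.lower().split())  # ignore case and extra spaces
        groups[key].append(url)
    return {title: sorted(urls) for title, urls in groups.items() if len(urls) > 1}
```

Given `/blog/seo-basics` titled "SEO Basics for Beginners" and `/blog/seo-101` titled "SEO basics for  beginners", the function flags both URLs under the shared title "seo basics for beginners".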

5. Syndicate Content Strategically

Content syndication—republishing your content on multiple websites—is a powerful way to expand reach, build authority, and drive traffic. But syndication needs strategy. Without careful execution, it can lead to duplicate content issues, splitting traffic, and diluting SEO impact.

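Done right, with partners linking back to your original and either adding a canonical tag that points to it or marking their copy noindex, syndication amplifies your reach without undermining your SEO.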
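A common safeguard is to ask syndication partners to either point a canonical tag at your original or mark their copy `noindex`. This Python sketch uses the standard library's `html.parser` to read a partner page's canonical link and robots meta tag, and treats the copy as safe if either signal is present. Fetching the partner's HTML is left out, and the example.com URL is a placeholder.

```python
from html.parser import HTMLParser


class HeadSignals(HTMLParser):
    """Collect the canonical URL and robots directives from a page's HTML."""

    def __init__(self):
        super().__init__()
        self.canonical = None
        self.robots = ""

    def handle_starttag(self, tag, attrs):
        # html.parser already lowercases tag and attribute names
        a = {name: (value or "") for name, value in attrs}
        if tag == "link" and "canonical" in a.get("rel", "").lower().split():
            self.canonical = a.get("href")
        elif tag == "meta" and a.get("name", "").lower() == "robots":
            self.robots = a.get("content", "").lower()


def syndication_is_safe(partner_html: str, original_url: str) -> bool:
    """True if the partner copy canonicalizes to the original or is noindexed."""
    parser = HeadSignals()
    parser.feed(partner_html)
    points_to_original = parser.canonical == original_url
    not_indexed = "noindex" in parser.robots
    return points_to_original or not_indexed
```

A partner copy containing `<link rel="canonical" href="https://example.com/blog/guide">` passes the check for that original URL, as does one with `<meta name="robots" content="noindex, follow">`; a bare copy with neither tag does not.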

Some duplicate content is unavoidable. As your website expands, your CMS, URL parameters, or blog taxonomies (categories, tags, archives) can generate unintentional duplicates that often slip under the radar.

The key isn’t eliminating duplication entirely, but managing it effectively. By following best practices, you can prevent ranking losses, avoid SEO pitfalls, and keep your organic traffic intact.

Struggling with duplicate content issues? FoxAdvert can help. With advanced tools, expert insights, and proven SEO strategies, we ensure your content ranks where it should—without competition from itself.